This exercise is similar to Experiment 1, but with a bit more complexity. Once again, I am creating an SSIS package & then replicating the functionality using Python for a PostgreSQL database. In Experiment 1, the task was to read from CSV file into a table, with the inclusion of a Derived Column. In Experiment 2, the data source will be an XML file & a Conditional Split/Filter will be applied.

Overview: Insert data into a table from an XML document while applying a filter.

- Create sample data from an existing table (output in XML format).

- Import into target table.

Notes:

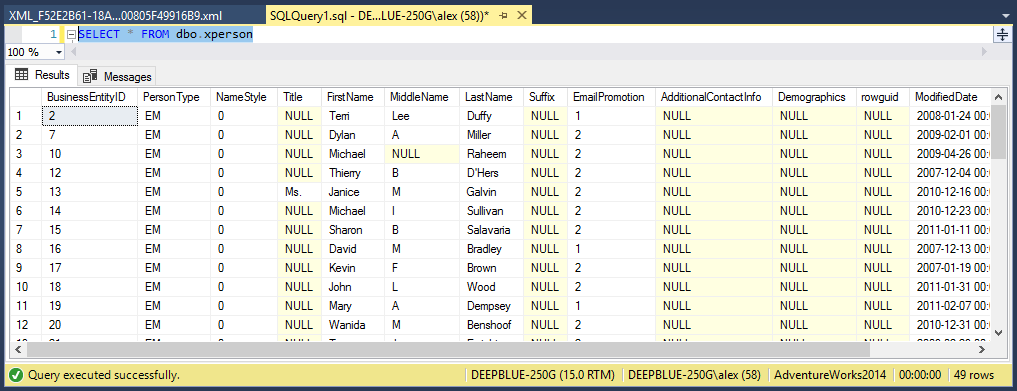

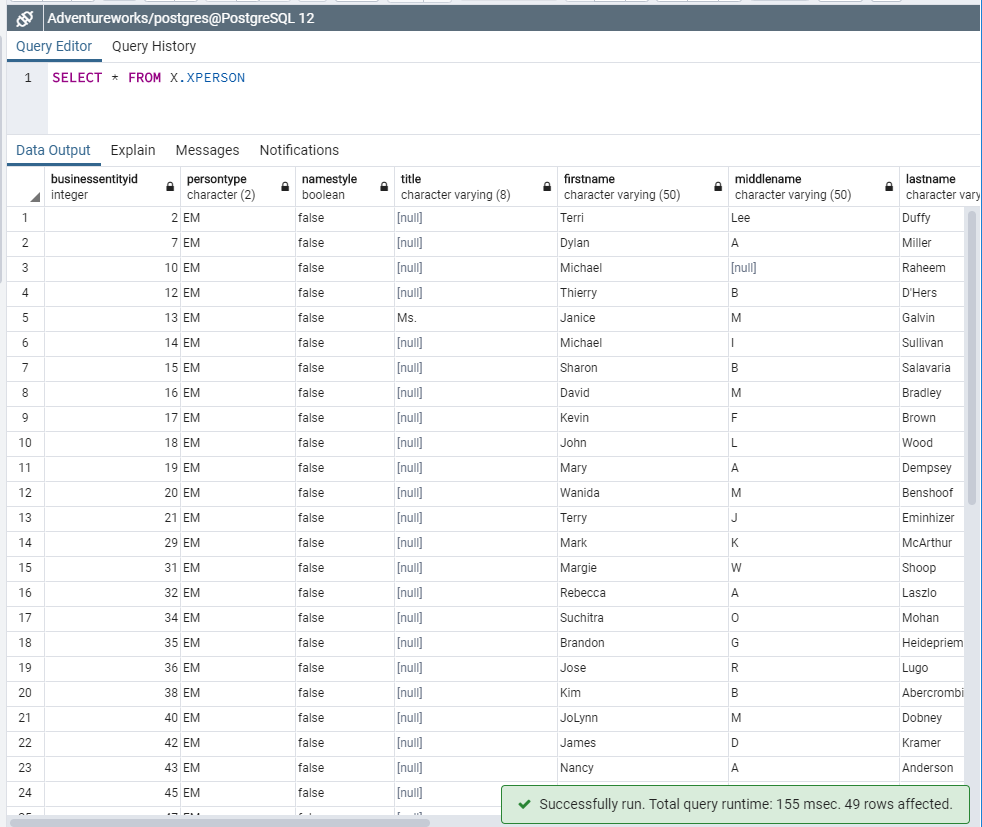

- I used the Person.Person table in the AdventureWorks2014 database in both environments.

- The sample data is the first 100 records from the table.

- The filter was applied on the EmailPromotion field. Excluding records with an EmailPromotion value of 0 left 49 records to be inserted.

Process:

Sample Data: XML Document Creation – SQL Server

SELECT TOP (100) p.businessentityid,

p.persontype,

p.namestyle,

p.title,

p.firstname,

p.middlename,

p.lastname,

p.suffix,

p.emailpromotion,

p.modifieddate

FROM Person.Person p FOR XML AUTO, ROOT('Person'), ELEMENTS XSINIL;

Note: ROOT was necessary to avoid an “XML document cannot contain multiple root level elements” xml validation error in the SSIS package.

Sample Data: XLM Document Creation – PostgreSQL

COPY

(SELECT query_to_xml

('SELECT p.businessentityid,

p.persontype,

p.namestyle,

p.title,

p.firstname,

p.middlename,

p.lastname,

p.suffix,

p.emailpromotion,

p.modifieddate FROM Person.Person p LIMIT 100', TRUE, FALSE, '')

)

TO 'C:\quick\DBExports\PersonTop100_Postgres.xml';

Note: Note the use of the “query_to_xml” function. Additional documentation can be found here.

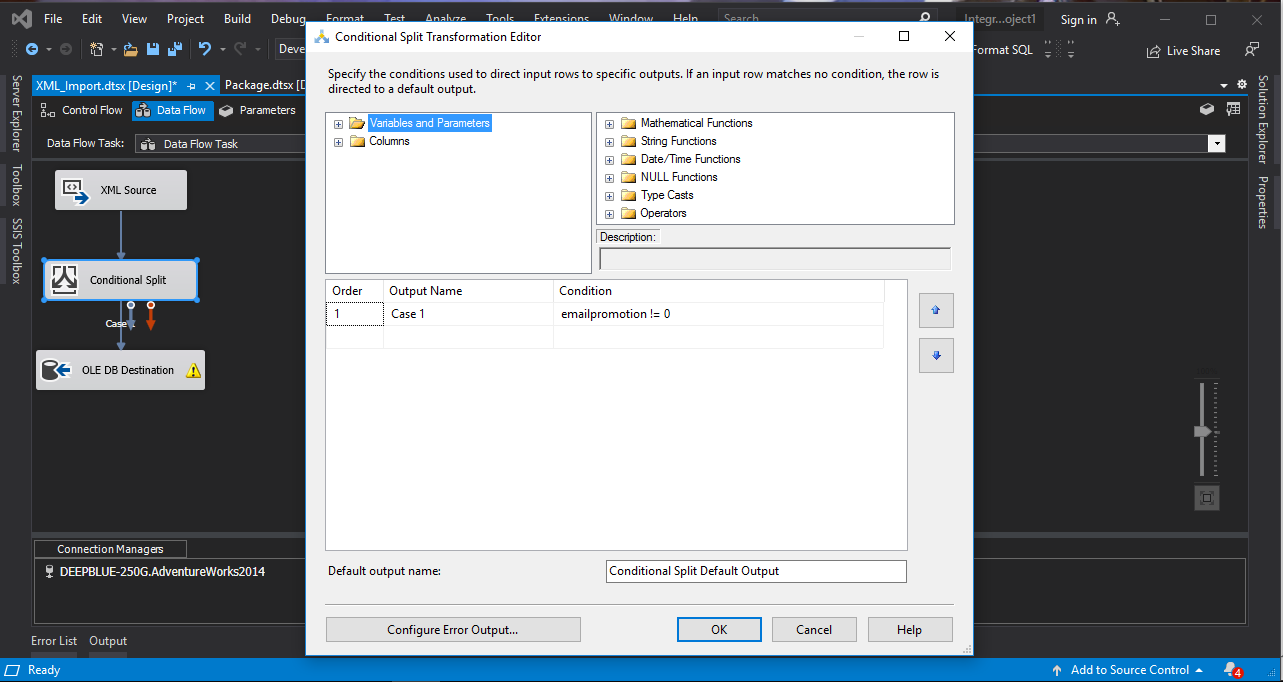

SSIS Package:

The SSIS package consisted of the:

- Connection Manager to the Database

- Data Flow with:

- an XML Source

- a Conditional Split

- an OLE DB Destination

The condition for the Conditional Split:

emailpromotion != 0

The package ran successfully, resulting in the output below.

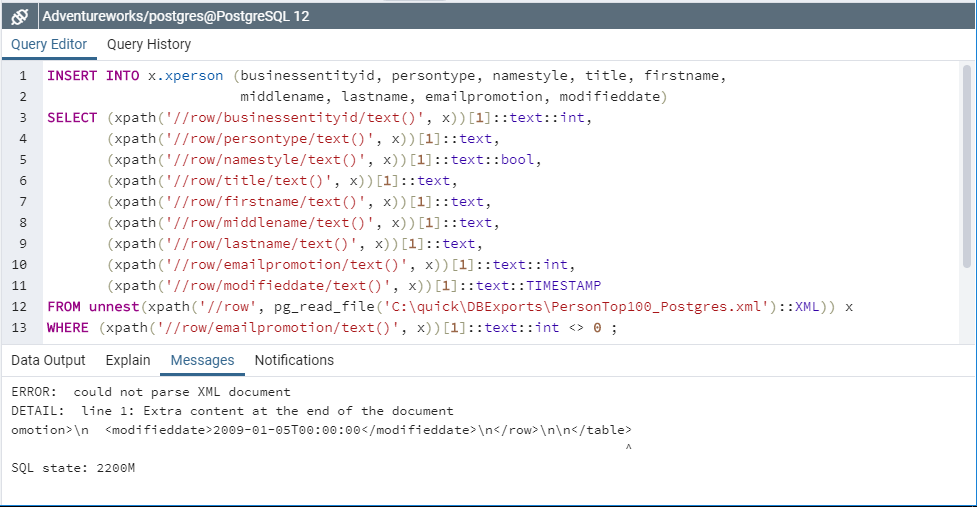

Python/PostgreSQL:

- XPath was used to access the desired nodes from the XML document.

- Filtering was accomplished by adding a WHERE clause.

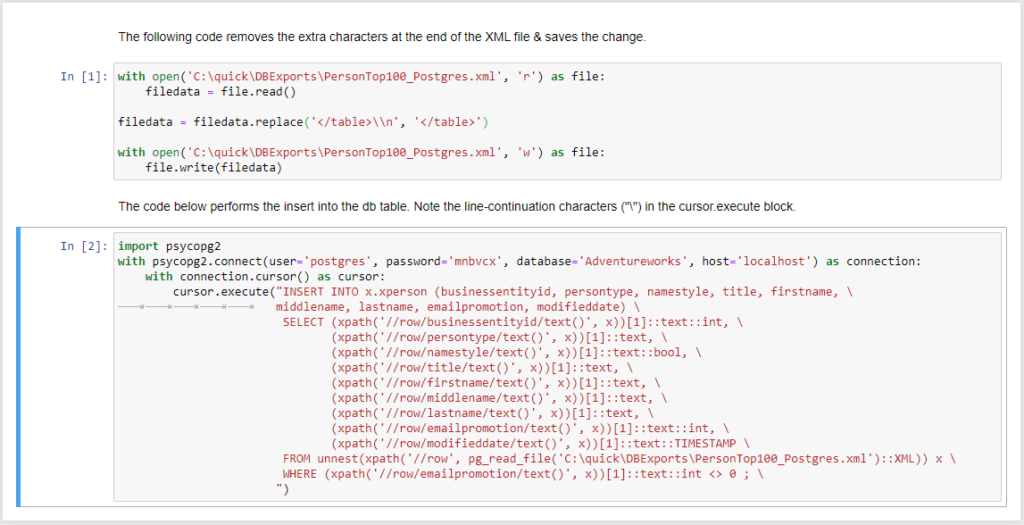

- The XML document had an extra line at the end of the file; specifically, the file terminated with “</table>\n”. The additional “\n” caused an error when attempting to import the data.

The Python code consists of 2 blocks; one to remove the extra line & the other to write the data to the table.

with open('C:\quick\DBExports\PersonTop100_Postgres.xml', 'r') as file:

filedata = file.read()

filedata = filedata.replace('</table>\\n', '</table>')

with open('C:\quick\DBExports\PersonTop100_Postgres.xml', 'w') as file:

file.write(filedata)

import psycopg2

with psycopg2.connect(user='postgres', password='mnbvcx', database='Adventureworks', host='localhost') as connection:

with connection.cursor() as cursor:

cursor.execute("INSERT INTO x.xperson (businessentityid, persontype, namestyle, title, firstname, \

middlename, lastname, emailpromotion, modifieddate) \

SELECT (xpath('//row/businessentityid/text()', x))[1]::text::int, \

(xpath('//row/persontype/text()', x))[1]::text, \

(xpath('//row/namestyle/text()', x))[1]::text::bool, \

(xpath('//row/title/text()', x))[1]::text, \

(xpath('//row/firstname/text()', x))[1]::text, \

(xpath('//row/middlename/text()', x))[1]::text, \

(xpath('//row/lastname/text()', x))[1]::text, \

(xpath('//row/emailpromotion/text()', x))[1]::text::int, \

(xpath('//row/modifieddate/text()', x))[1]::text::TIMESTAMP \

FROM unnest(xpath('//row', pg_read_file('C:\quick\DBExports\PersonTop100_Postgres.xml')::XML)) x \

WHERE (xpath('//row/emailpromotion/text()', x))[1]::text::int <> 0 ; \

")

The code ran successfully. The results can be seen below.

Operations:

- Query output to XML format

- In Postgres, this involved the “query_to_xml” function

- Import to tables from XML document – with filtering

- Selecting XML nodes with XPath

- Reading & writing to files

Closing:

- If you have thoughts, tips, tricks, corrections, etc., please leave a comment below.

- If you have an questions, please feel free to ask in the comments & I will be happy to follow up.